
Appwrite vs Supabase – Which backend solution is best for you? Cloud vs Self-Hosted performance comparison

This comprehensive comparison evaluates the performance, scalability, and ease of use between Appwrite and Supabase.

In this article, you will read about:

- Appwrite performance: How fast Appwrite handles eCommerce simulation

- Supabase performance: How fast Supabase performs under the same eCommerce simulation

- Cloud vs Self-Hosted: How self-hosted instances can outperform cloud options, offering better control and performance for various use cases

- Flexibility: Which platform, Supabase or Appwrite, is better suited for your specific needs, based on performance, flexibility and ease of use

- Cost-effective scaling: How many users a €5-30 server can support for both Supabase and Appwrite self-hosted configurations

By the end, you’ll have a clear understanding of which solution, Supabase or Appwrite, will help you better bootstrap your MVP or scale and perform optimally, as well as when to choose cloud hosting versus setting up your own instance for maximum flexibility and performance.

Additionally, I include a code repository with detailed results, so you can rerun the same scenarios and easily modify them to fit your specific use case.

Results may surprise you.

No one’s slipping us any cash for this—the article is sponsored by Codigee only!

Motivation

The idea behind this comparison is simple: I wanted to see how Supabase and Appwrite perform, as well as their self-hosted instances on a budget-friendly setup.

Looking for cost-effective solutions to power apps, websites or additional infrastructure, I decided to test these platforms on affordable hardware. My objective is to understand how well these backend services handle typical eCommerce user simulation and whether they can deliver reliable performance at a lower price. This way, I can figure out if it’s possible to bootstrap a project on a budget without sacrificing performance or scalability.

I’m also a little tired of everyone pushing expensive and complicated AWS setups that end up pulling you into complex cost structures, easily exceeding €100 per month. That’s why I’m giving you a solution that offers decent performance for a fraction of the cost—max €30 per month. It’s all about getting the most out of self-hosting without breaking the bank.

Tools

- Appwrite: A backend platform to handle authentication, databases, and more, available in both cloud and self-hosted setups

- Supabase: A popular alternative to Firebase, also available in both cloud and self-hosted setups

- Grafana k6: A powerful tool for load testing, helping measure how well these platforms handle simulated traffic

- Bun and ElysiaJS: I’ll be using these to expose APIs and Appwrite/Supabase SDKs, providing the interface through which the tests can be conducted

- Mocked database: The setup includes a database with 25,000 product entries and 5,000 user entries

All of them are open source, offering powerful functionality as well as active communities for support and development.

ElysiaJS is said to be 21x faster than Express.

Hosting

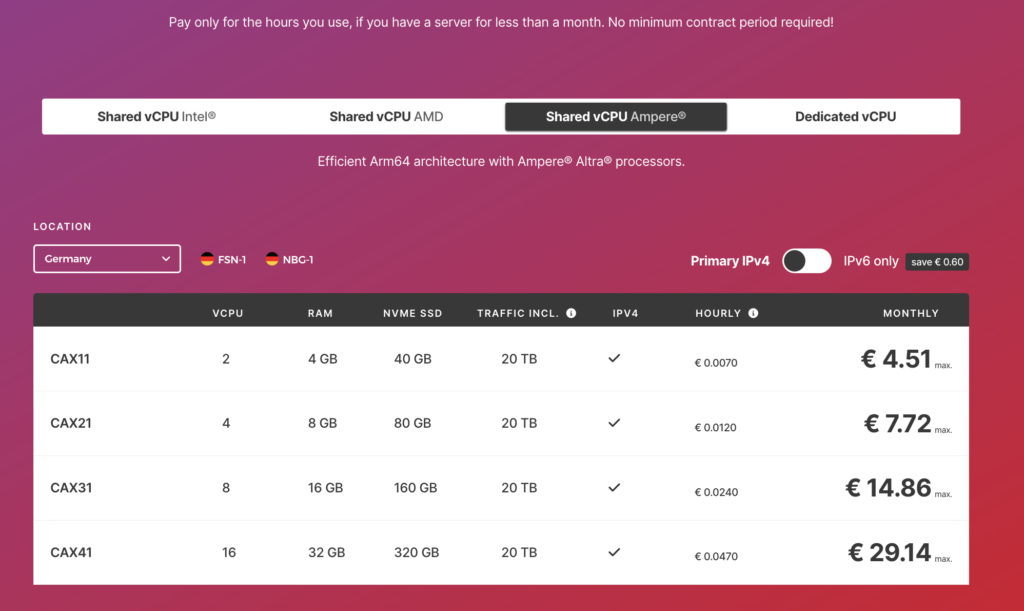

Hetzner Ampere vCPUs

For the self-hosting portion of this comparison, I’m using Hetzner Cloud Shared vCPUs. These are affordable cloud machines that you pay for based on minutes of usage, with a maximum monthly limit. This means that no matter what you do on the server, you won’t exceed that limit.

The servers I’m using are built on Ampere architecture, which is known for its energy efficiency, running Ubuntu as the operating system.

For the cloud testing, I’m sticking strictly to free-tier accounts for both Supabase and Appwrite, staying within their usage limits to simulate the most cost-effective setup. What’s interesting is that when you’re creating instances in the cloud, you can choose similar locations to those provided by Hetzner.

All pricing mentioned in this article is valid as of September 25, 2024.

Test scenarios

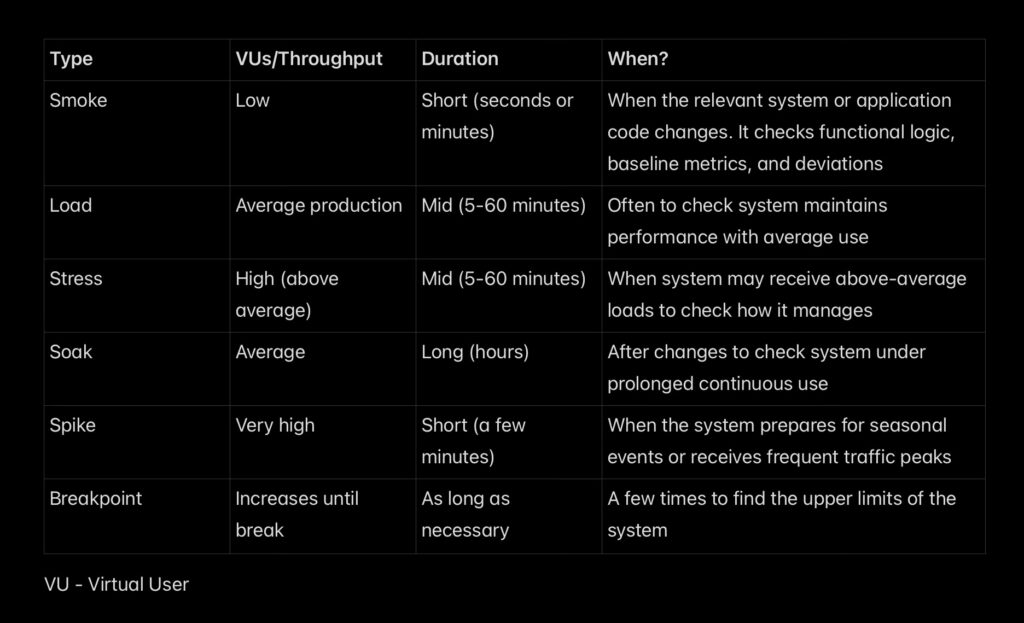

Test types

For each Hetzner instance, I will be testing to find the breakpoint, the point at which the server can no longer handle the load. After identifying that, I will run a load test and determine the maximum comfort zone where the server can operate while maintaining industry-standard response times. This process will be repeated for each server instance.

To ensure accurate results, I will clear the cache and storage before each test, making sure that only one app instance is running at a time.

Ecommerce simulation

To simulate basic user behavior I will install either Appwrite or Supabase, along with the respective API client for each. I won’t tweak the tools in any way, as the goal is to evaluate their out-of-the-box performance, not artificially boost benchmarks by fine-tuning. The idea is to see how these platforms perform under normal, everyday conditions.

Next, I will import two tables into the server’s database:

- 5,000 users, with fields such as name, address, and phone number

- 20,000 products, with fields including details, number, and image URL

To simulate an eCommerce user, I’ll implement a Virtual User (VU) instance. The VU will follow this sequence:

- GET /user: Query the users table to find a match based on an exact name and return the corresponding row

- Immediately after receiving the user response, the VU will call:

- GET /products: Query the products table using the following steps:

- Match fields like name and image

- Order the results in ascending order

- Limit the query to return a specific number of products

Once the product data is received, the VU will wait 1 second and repeat the entire process.

This setup simulates a common eCommerce flow, where a user logs in, retrieves their details, and fetches filtered product data for display on the home screen. It’s important to note that this test focuses on raw backend performance. In real-world applications, client-side caching would typically reduce load, but we are excluding that here to stress-test the backend’s full capability.

Additionally, the 1-second loop is intentionally aggressive, continuously querying the server, which isn’t typical in user behavior. Normally, after login and fetching data, users would interact with the screen for a few seconds before making further requests. In this case, the constant querying represents a heavy load scenario, further pushing the limits of each platform’s performance.

Breakpoint testing

For breakpoint testing, I will gradually increase the number of VU instances over time, using k6's ramping-vus executor, to observe how the server performs under increasing load. The test starts with 1 VU for 30 seconds to give the server warm-up time, and then I begin increasing the load gradually, from 1 to 50 VUs over 30 seconds, followed by increments up to 500 VUs and beyond.

By the end of the test, the goal is to have 10,000 VUs calling both endpoints in a continuous 1-second loop.

The objective of this test is to observe how the server responds to an increasing number of concurrent users, determining the breaking point—the number of users the server can handle before it starts experiencing timeouts, errors, or crashes. This will give a clear indication of each platform’s capacity for scaling.

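    import http from "k6/http";
    import { sleep } from "k6";

    // URL_USER and URL_PRODUCTS are assumed to be defined elsewhere in the
    // script and to point at the API client's /user and /products endpoints.

    export const options = {
      scenarios: {
        breakpoint: {
          executor: "ramping-vus",
          startVUs: 1,
          stages: [
            { duration: "30s", target: 1 }, // Warm-up
            { duration: "30s", target: 50 },
            { duration: "30s", target: 500 },
            { duration: "30s", target: 1000 },
            { duration: "30s", target: 2000 },
            { duration: "30s", target: 3000 },
            { duration: "30s", target: 5000 },
            { duration: "30s", target: 10000 },
          ],
          gracefulRampDown: "1s",
        },
      },
    };

    // One VU iteration: fetch the user, then the products, then wait 1 second.
    export default () => {
      const user = http.get(URL_USER);
      const products = http.get(URL_PRODUCTS);
      sleep(1);
    };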

Finding comfort zone

To identify the comfortable number of users a server can handle while maintaining industry-standard performance, I will run a load test with a constant number of VUs over a set period of time. The test will only pass if the server responses meet the following conditions:

- 99% of requests are returned in under 1000ms

- 95% of requests are returned in under 500ms

- 90% of requests are returned in under 200ms

- 99% of responses are successful (return 200 OK)

These criteria ensure that the server operates within the performance thresholds typically expected in the industry. If these thresholds are not met, the test will fail, indicating that the server cannot handle the specified load comfortably.

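    import http from "k6/http";
    import { sleep } from "k6";

    // URL_USER and URL_PRODUCTS are assumed to be defined elsewhere in the
    // script and to point at the API client's /user and /products endpoints.

    const VUS = 100;

    export const options = {
      scenarios: {
        load_3rules: {
          executor: "constant-vus",
          vus: VUS,
          duration: "60s",
        },
      },
      thresholds: {
        // All three latency rules go under a single key. Repeating the
        // http_req_duration key would keep only the last rule.
        http_req_duration: ["p(99)<1000", "p(95)<500", "p(90)<200"],
        http_req_failed: ["rate<0.01"],
      },
    };

    // One VU iteration: fetch the user, then the products, then wait 1 second.
    export default () => {
      const user = http.get(URL_USER);
      const products = http.get(URL_PRODUCTS);
      sleep(1);
    };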
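To make the four pass conditions concrete, here is a minimal sketch in plain JavaScript that checks them against a list of recorded samples. The sample shape (`durationMs`, `ok`) and the function names are assumptions made for illustration, and the nearest-rank percentile used here is not necessarily the exact method k6 applies internally.

```javascript
// Nearest-rank percentile over response times in milliseconds.
// Illustrative only; k6 may interpolate percentiles differently.
function percentile(values, p) {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Limits taken from the conditions listed in this section.
const LATENCY_LIMITS = [
  { p: 99, maxMs: 1000 },
  { p: 95, maxMs: 500 },
  { p: 90, maxMs: 200 },
];
const MAX_FAILURE_RATE = 0.01;

// samples: [{ durationMs: number, ok: boolean }] (hypothetical shape)
function inComfortZone(samples) {
  const durations = samples.map((s) => s.durationMs);
  const failed = samples.filter((s) => !s.ok).length;
  const latencyOk = LATENCY_LIMITS.every(
    ({ p, maxMs }) => percentile(durations, p) < maxMs
  );
  return latencyOk && failed / samples.length < MAX_FAILURE_RATE;
}
```

A run where 15% of requests take 300 ms fails even though every request succeeds, because the 90th percentile is then above 200 ms.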

Test sequence

To ensure proper and consistent results during backend performance testing, I will follow this exact sequence for both Appwrite and Supabase.

- Start testing for the instance

- Enable a clean CAX11

- Install Appwrite

- Install the API client for Appwrite

- Run the breakpoint test

- Run the load test

- Clean and clear the server

- Install Supabase

- Install the API client for Supabase

- Run the breakpoint test

- Run the load test

- End testing for the instance

Once both platforms have been tested on the CAX11 instance, I will increase the server size to a Hetzner CAX21 instance and repeat the entire process to compare how scaling server resources impacts the performance of both Appwrite and Supabase under similar conditions.

Assumptions

- The server is located in Germany and I am approximately 1,500 km away

- I am using a 5G/4G LTE mobile network to connect to the server, simulating a more natural flow for mobile apps, as most end users will access the backend through mobile networks rather than a high-speed fiber connection

- The tool used for load testing is fully capable of performing these tests. The scale of the test, with up to 10,000 VUs, is well within the capabilities of k6 and is not considered a massive load for this tool.

Due to varying network conditions, tests are not 100% deterministic, and that’s just the nature of real-world testing. Factors like network fluctuations can introduce some variability in response times. However, the results provide a realistic view of how these platforms would perform in typical usage scenarios. This approach ensures that we capture the natural flow of mobile apps interacting with a backend, even if some slight inconsistencies occur.

Results

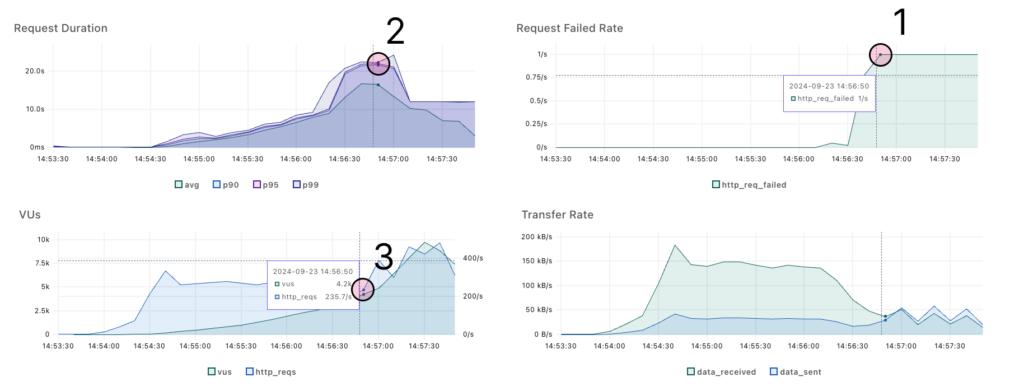

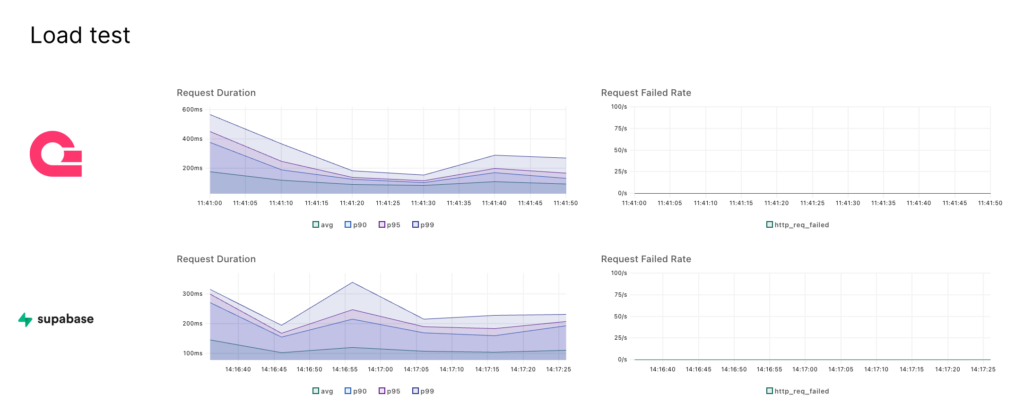

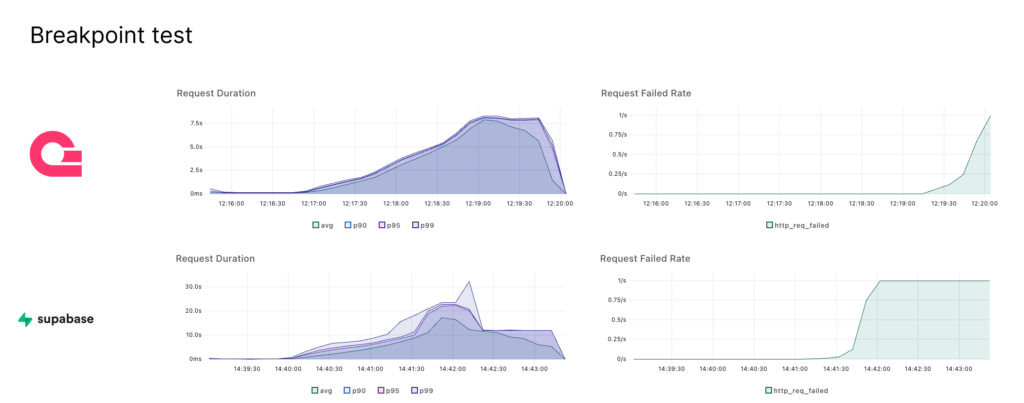

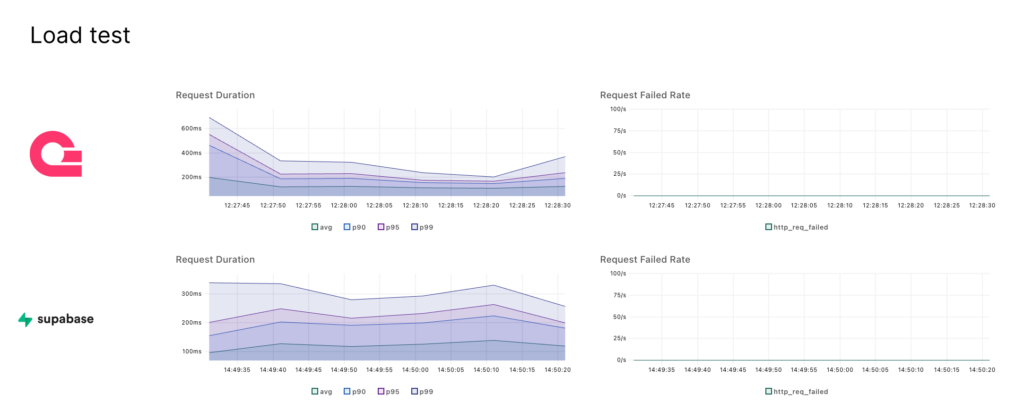

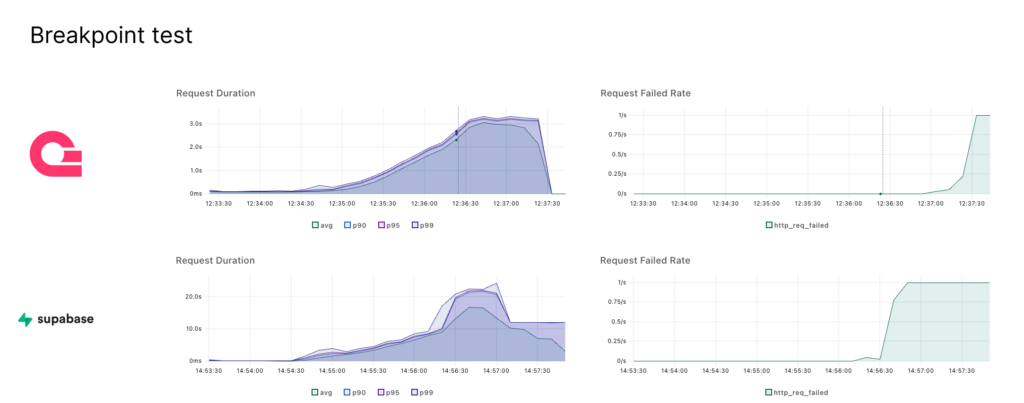

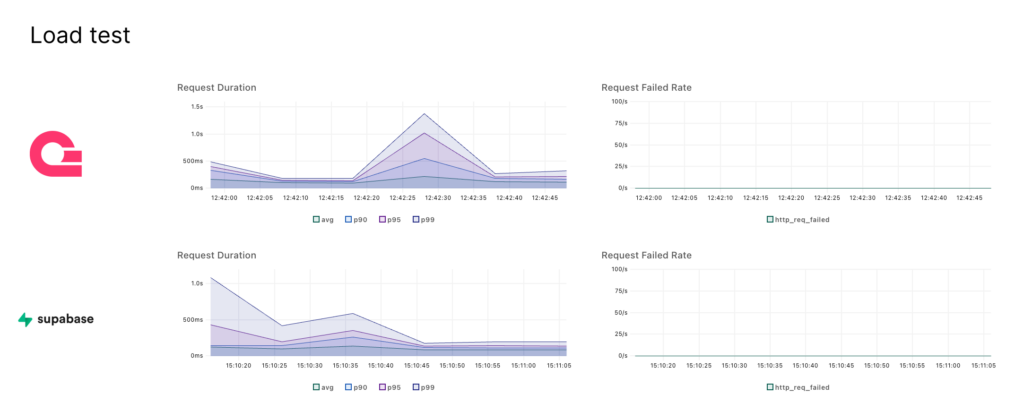

Finding breakpoint

k6 produces results and charts after the test (see the repository for complete data). To identify the breakpoint, I focus on the ridge where the request failure rate starts rising (Point 1). It coincides with the longest response durations (Point 2). Using the marked time frame, I then look at the VUs chart to determine how many users were active at the point of failure (Point 3).

That point doesn’t mean the server has frozen or stopped working entirely. It still serves responses, but it indicates the region where the first timeouts and critical errors occur.
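Reading the VU count off the chart can be cross-checked with a small calculation. The sketch below assumes the ramp between stage targets is linear and uses the stage list from the breakpoint scenario; the function name `vusAt` is made up for this example.

```javascript
// Stages from the breakpoint scenario, with durations in seconds.
const STAGES = [
  { duration: 30, target: 1 }, // warm-up
  { duration: 30, target: 50 },
  { duration: 30, target: 500 },
  { duration: 30, target: 1000 },
  { duration: 30, target: 2000 },
  { duration: 30, target: 3000 },
  { duration: 30, target: 5000 },
  { duration: 30, target: 10000 },
];

// Planned number of VUs at a given elapsed time, assuming a linear ramp
// from the previous target to the current stage's target.
function vusAt(stages, startVUs, elapsedSeconds) {
  let from = startVUs;
  let stageStart = 0;
  for (const { duration, target } of stages) {
    const stageEnd = stageStart + duration;
    if (elapsedSeconds <= stageEnd) {
      const progress = (elapsedSeconds - stageStart) / duration;
      return from + (target - from) * progress;
    }
    from = target;
    stageStart = stageEnd;
  }
  return from; // past the last stage: hold the final target
}

// Example: failures start rising 75 seconds into the test.
console.log(Math.round(vusAt(STAGES, 1, 75)));
```

This gives the planned VU count only. The chart remains the source of truth, since the real number of active VUs can lag behind the plan when the load generator itself is under pressure.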

Be cautious when interpreting the requests per second (req/s) value. As more users send requests and the server responds in time, this value can increase. However, there is a tipping point where, even though the number of VUs continues to rise, the server can no longer process everything in time. At this point, the request rate may drop, even with more users arriving, indicating that the server is becoming overwhelmed.
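A rough model shows why req/s can fall while VUs keep rising. Each VU sends two sequential requests and then sleeps for 1 second, so its request rate depends on how long the responses take. The sketch below is an idealized approximation that ignores timeouts and errors. The 0.5 s latency is a hypothetical healthy value, while the 6 s figure is the average response time reported for Supabase at its breakpoint later in this article.

```javascript
const REQUESTS_PER_ITERATION = 2; // GET /user, then GET /products
const SLEEP_SECONDS = 1; // pause at the end of each VU iteration

// Approximate overall request rate for a closed loop of virtual users.
function requestsPerSecond(vus, avgLatencySeconds) {
  const iterationSeconds =
    REQUESTS_PER_ITERATION * avgLatencySeconds + SLEEP_SECONDS;
  return (vus * REQUESTS_PER_ITERATION) / iterationSeconds;
}

const healthy = requestsPerSecond(1000, 0.5); // hypothetical healthy latency
const overloaded = requestsPerSecond(3000, 6); // 6 s average at the breakpoint
console.log(healthy.toFixed(0), overloaded.toFixed(0));
```

In this model, tripling the VUs from 1,000 to 3,000 cuts the request rate from roughly 1,000 to roughly 460 req/s, because each VU spends most of its time waiting for responses.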

As you may have noticed, even before reaching the breakpoint, the server’s responses can take up to 15 seconds. While this is better than a complete failure, it’s still far from providing a good user experience. That’s why I’ve added a load test with fixed thresholds.

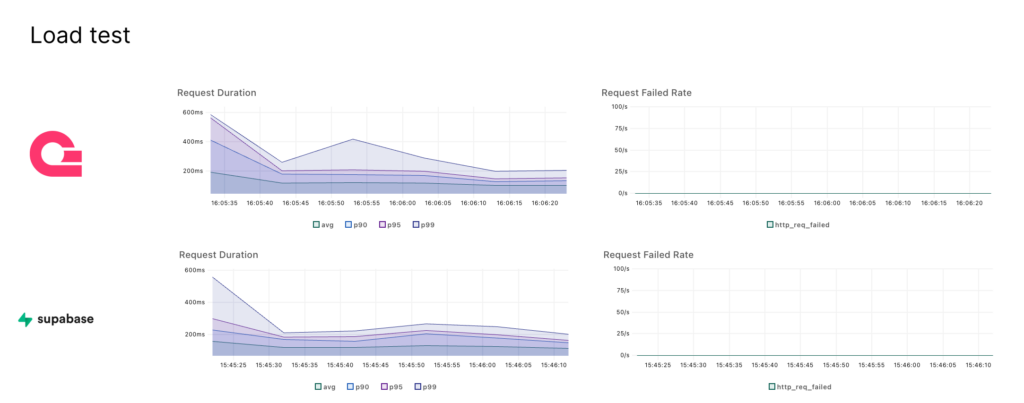

Finding comfort zone

To identify the maximum VUs for the server’s comfort zone, I manually run a test with constant VUs. If the thresholds are met, the value satisfies the limitations, which I can observe in the terminal output. I then manually increase the value until reaching the maximum acceptable limit. After that, I rerun the test, and if the results confirm it, I have identified the maximum VUs.
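I did this search by hand, but the same idea can be sketched as a binary search. The code below is an illustration under two assumptions: a hypothetical `passes(vus)` callback runs one constant-VU load test and reports whether all thresholds were met, and the outcome is monotonic (once a VU count fails, every higher count fails too). Because real runs vary with network conditions, the result should still be confirmed with a rerun.

```javascript
// Largest VU count in [low, high] for which passes(vus) is true.
// Returns 0 when even the lowest setting fails.
function findMaxVus(passes, low, high) {
  if (!passes(low)) return 0;
  let best = low;
  let lo = low + 1;
  let hi = high;
  while (lo <= hi) {
    const mid = Math.floor((lo + hi) / 2);
    if (passes(mid)) {
      best = mid; // thresholds met: try a higher load
      lo = mid + 1;
    } else {
      hi = mid - 1; // thresholds missed: back off
    }
  }
  return best;
}

// Stand-in for a real test run: pretend the server is comfortable up to 250 VUs.
const fakeRun = (vus) => vus <= 250;
console.log(findMaxVus(fakeRun, 1, 1000));
```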

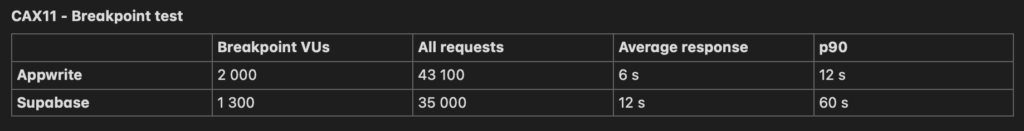

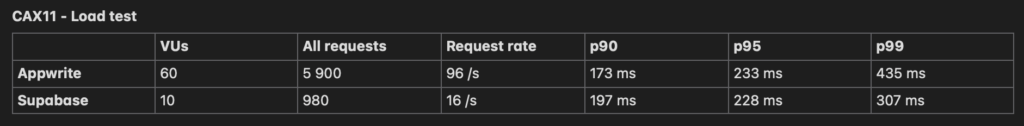

CAX11 – 2vCPU 4GB RAM

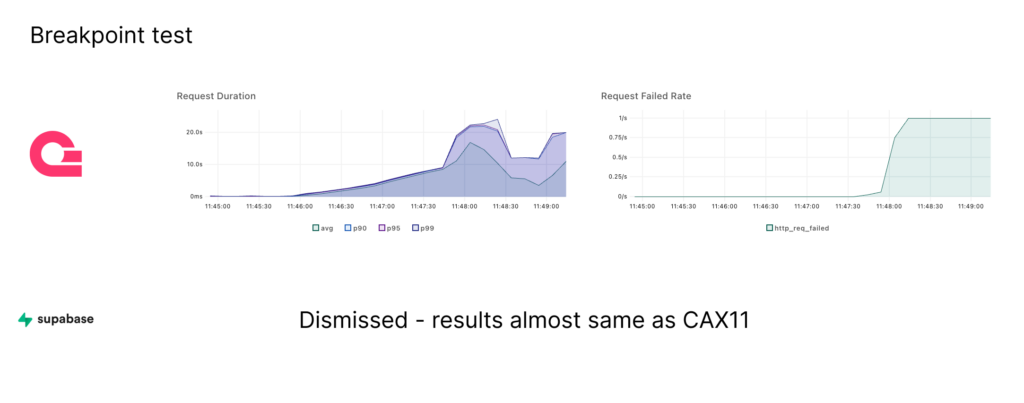

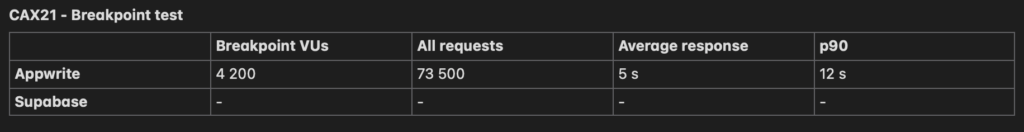

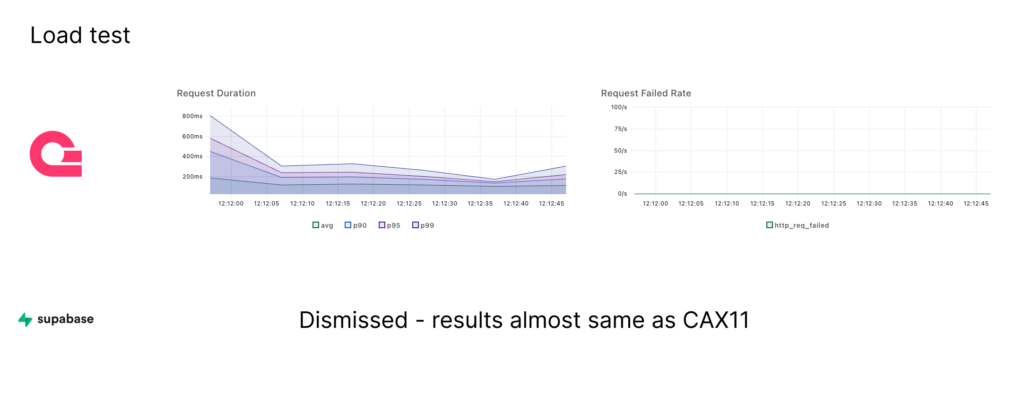

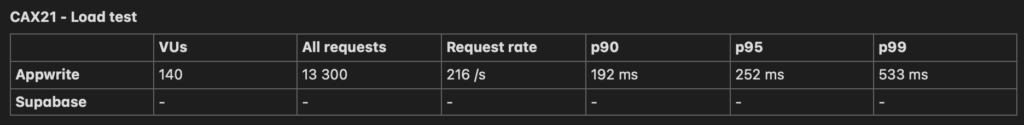

CAX21 – 4vCPU 8GB RAM

In this test, Supabase performed almost identically to the CAX11 instance, so I allowed myself to skip tests. The values varied by a maximum of about 10%.

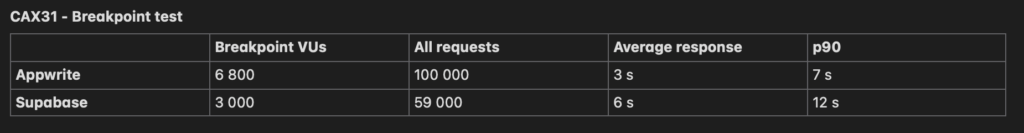

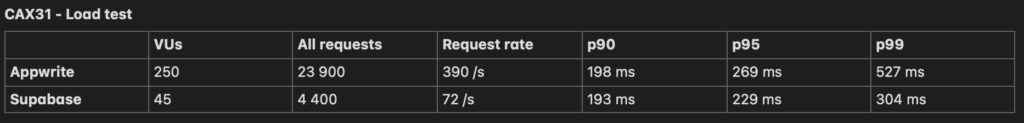

CAX31 – 8vCPU 16GB RAM

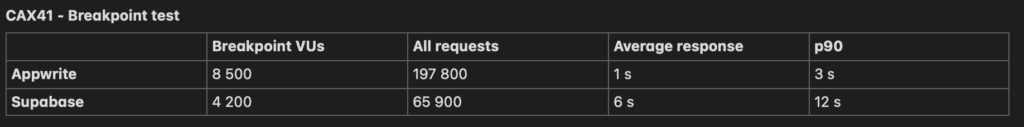

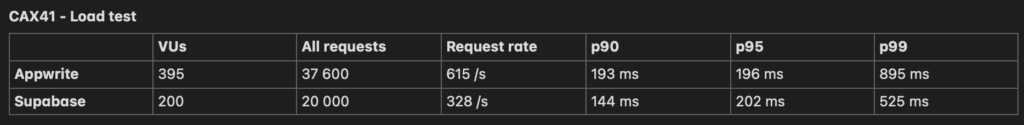

CAX41 – 16vCPU 32GB RAM

Cloud – Free Tier

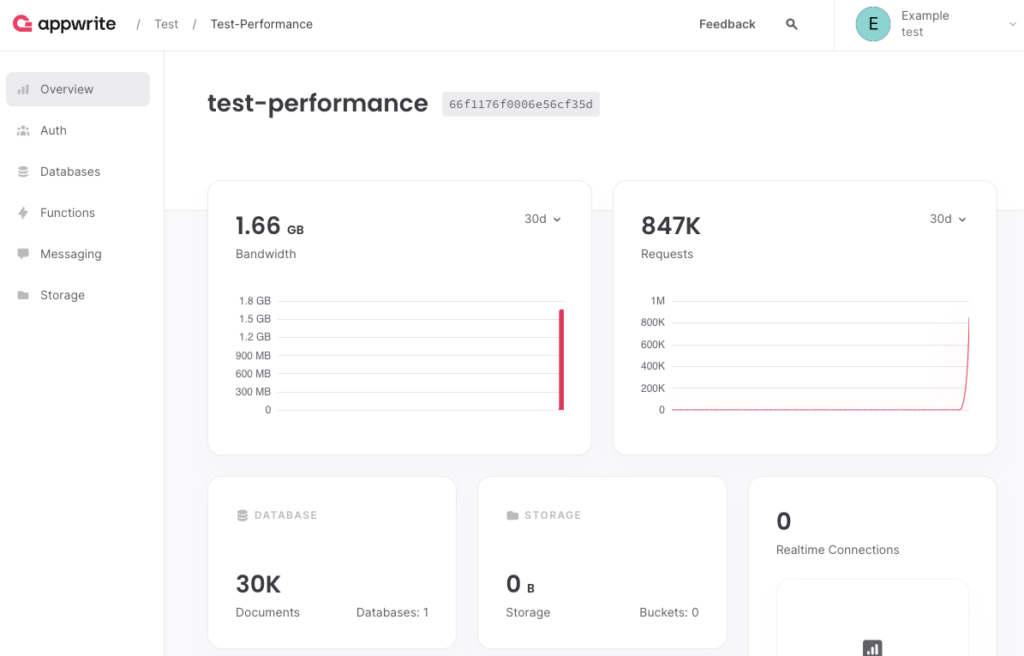

To increase data reliability, I created cloud servers in the same locations as the Hetzner vCPUs.

Summary and comparison

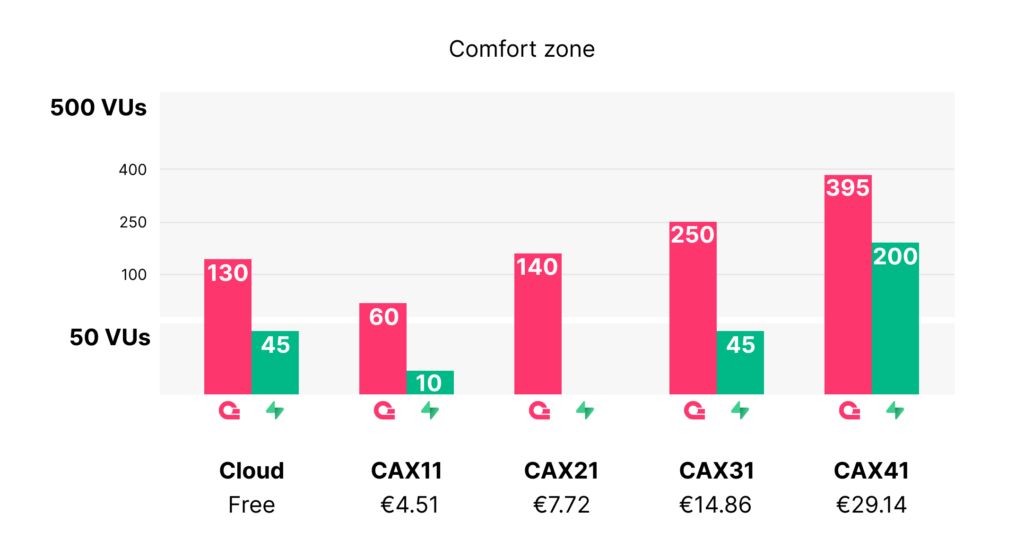

Comfort zone

The comfort zone refers to the number of VUs a server can handle simultaneously while maintaining acceptable performance, as defined by specific thresholds. As a reminder, the thresholds for a good user experience are:

- 90% of requests returned within 200ms

- 95% of requests returned within 500ms

- 99% of requests returned within 1000ms

- 99% of responses are successful (HTTP status 200)

In the comparison, Appwrite consistently performed better in maintaining this comfort zone, handling more VUs while keeping response times under these thresholds.

For instance, on a CAX31 instance, Appwrite comfortably supported 250 VUs with response times fitting within limits. In contrast, Supabase struggled to maintain similar performance, reaching its comfort zone with only 45 VUs.

These results highlight that Appwrite can support a significantly higher number of simultaneous requests, making it more scalable within these thresholds. Supabase, while still capable, reaches its comfort zone sooner, particularly when handling heavier loads.

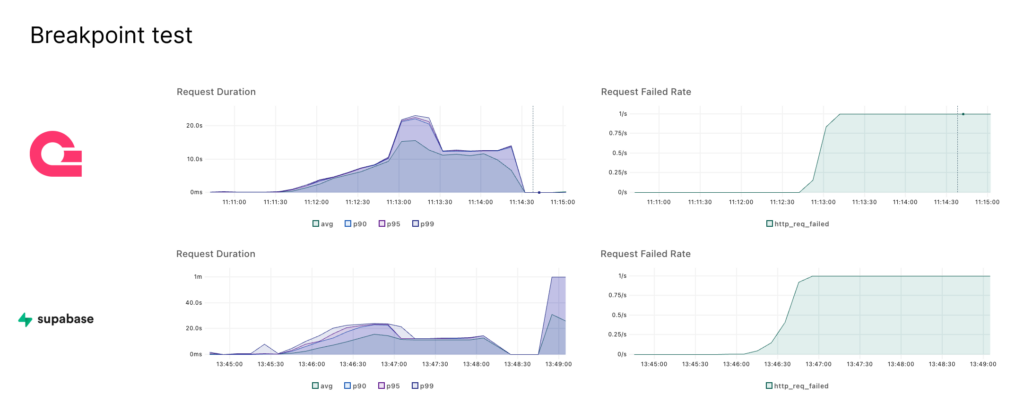

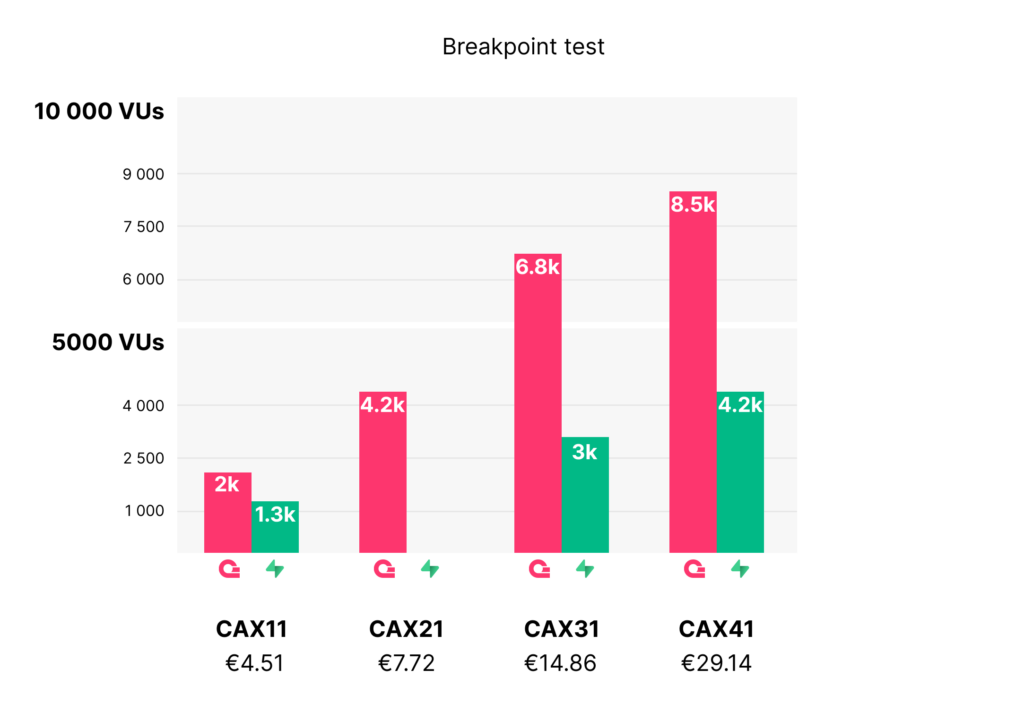

Breakpoint

The chart shows the point at which each platform reaches its limit in handling simultaneous VUs. This breakpoint is identified when the server starts to deliver failures or has timeouts.

In the tests, Appwrite consistently pushed higher limits. For example, on the CAX31 instance, Appwrite reached its breakpoint at 6,800 VUs, managing to process 100,000 requests with an average response time of 3 seconds. In comparison, Supabase reached its breakpoint much earlier, at 3,000 VUs, with 59,000 requests and an average response time of 6 seconds.

These results indicate that Appwrite can handle significantly larger loads before performance starts to degrade, while Supabase hits its breaking point much sooner.

Performance

When comparing the performance of Supabase and Appwrite, it becomes clear that Appwrite consistently handles larger loads more efficiently. In the tests, Appwrite demonstrated better scalability. In contrast, Supabase showed limitations under higher loads, particularly in the breakpoint tests, where it struggled to maintain stable performance beyond a certain threshold.

What’s notable is that the CAX31 instance—a self-hosted option—outperformed both cloud setups in terms of handling VUs and delivering faster response times. This highlights that opting for a self-hosted setup can provide better performance and scalability compared to relying on cloud environments, making it a more cost-effective and high-performance solution for demanding applications. This is important because self-hosted options don’t have limitations in terms of bandwidth, storage, or accounts. You can do whatever you need, as the server is efficient enough.

Another advantage of using self-hosted is the ability to combine instances for load balancing. This approach not only improves performance by distributing the load across multiple servers but also offers a cost-effective solution. By leveraging the power of the CAX31 for heavy tasks and offloading lighter or background processes to the CAX11, you can achieve better overall performance without breaking the budget. This combination provides an efficient way to scale while maintaining affordability.

Ease of use

When it comes to ease of use, both Supabase and Appwrite offer great SDKs right out of the box, making it simple to integrate their features into your applications. Both platforms also come with intuitive control panels that allow for easy customization and management of your backend services, whether you’re dealing with databases, authentication or other features.

However, Supabase can be more challenging to configure in a self-hosted environment, as it requires more setup and customization to get everything running smoothly. Additionally, several options in the Supabase panel are disabled or limited in self-hosted setups, which can restrict flexibility compared to its cloud version. Appwrite offers a more seamless self-hosted experience with fewer restrictions, making it a more straightforward choice for those prioritizing ease of use.

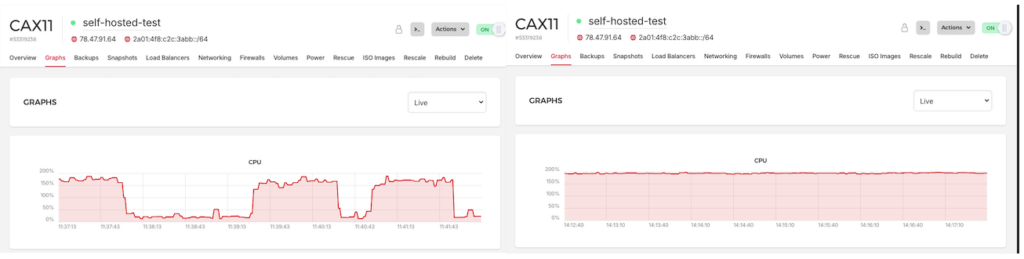

Idle consumption

For comparison, on the CAX11 instance, idle, Appwrite used about 30% of the server’s resources, while Supabase consumed a staggering 200%, continuously. It was so unrealistic that I couldn’t believe it at first, so I ran the tests again in the cloud – but the results were similar, confirming that’s just how it works.

Overall, Appwrite is lighter, faster, easier to configure, and more manageable. Supabase, on the other hand, is really only worth using in the cloud if you absolutely need a specific feature (like SQL).

Numbers

Imagine you have 50,000 eCommerce users per month.

- Assume users are active on about 25 days a month, which gives us 2,000 users per day.

- Let most traffic be concentrated within 8 hours per day, so 2,000 / 8 = 250 users per hour.

- 250 users per hour is roughly 4 users per minute. To account for traffic spikes, let’s increase this to 20 users per minute.

This means you can manage your infrastructure with a CAX11 or CAX21 instance for as little as 5 euros per month, offering unlimited bandwidth and the ability to store a large number of files.
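The estimate above can be reproduced with a few lines of JavaScript, which makes it easy to plug in your own traffic numbers. The function and parameter names are made up for this sketch, and the spike factor of 5 is inferred from the jump from 4 to 20 users per minute.

```javascript
// Back-of-the-envelope traffic estimate following the steps above.
function estimateLoad({ monthlyUsers, activeDays, peakHoursPerDay, spikeFactor }) {
  const usersPerDay = monthlyUsers / activeDays;
  const usersPerHour = usersPerDay / peakHoursPerDay;
  const usersPerMinute = Math.round(usersPerHour / 60); // "roughly 4"
  return {
    usersPerDay,
    usersPerHour,
    usersPerMinute,
    peakUsersPerMinute: usersPerMinute * spikeFactor,
  };
}

const estimate = estimateLoad({
  monthlyUsers: 50000,
  activeDays: 25,
  peakHoursPerDay: 8,
  spikeFactor: 5, // assumption: 4 users/minute scaled up to 20
});
console.log(estimate);
```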

These values are based on load tests, which also indicate that the server will be capable of handling more users before reaching its breaking point, though response times will become longer as the load increases.

And remember, I ran tests with pretty demanding scenarios and still achieved over 60 VUs at one time on the cheapest instance using Appwrite.

Guidelines for you

- Start with Supabase or Appwrite cloud for small PoCs, using their free tiers

- Choose Appwrite for most cases due to better performance and being lightweight

- Choose Supabase if you need specific features it offers

- Supabase is harder to configure for self-hosting

- Supabase consumes more idle resources compared to Appwrite

- Avoid Supabase if you know you will migrate out of the cloud later

- You can easily exceed CAX41 pricing on AWS while getting less performance

- You can choose a small instance for small queries and a powerful one for the database. Combine them freely

- You can achieve great performance with free tools out of the box. No need for Docker Swarm on AWS to kickstart a project

I generated in few hours.

Source Code

You can check the source to validate all the code and view the detailed reports recorded during the testing, presented as charts. These reports provide a clear visual representation of the server’s performance under different loads.

https://github.com/codigee-devs/supabase-appwrite-performance-testing

Extra

I also ran breakpoint tests on a bare Hetzner server to provide deeper insights into performance. For detailed results and a closer look at the tests, please visit the repository.
